
Nvidia is not able to correctly forecast when they will deliver their technology

Nvidia announced on Wednesday with its quarterly results that AI is now at a tipping point. Because I am (too) sceptical, I thought I would look back to one of the previous tipping points they promised, i.e. autonomous vehicles, to see how they have performed and therefore how valid their predictions might be.

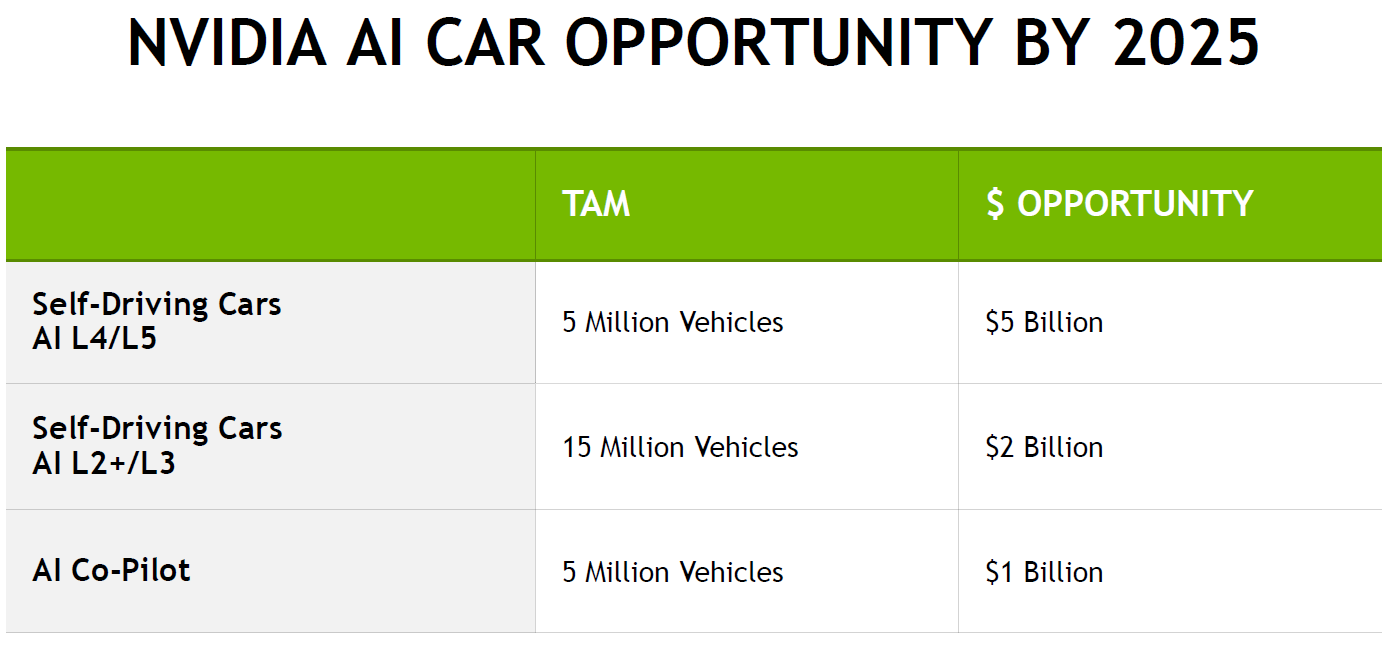

In 2017 they were promising great things for self-driving cars.

But their automotive revenues in 2023 were $1.1bln. This might be excusable if prices had dropped or the public had decided not to adopt the technology, but the technology essentially isn’t ready. We are nowhere close to seeing mass adoption of L2+/L3, L4 looks to be several years away, and L5 just looks harder than people imagined. This suggests that Nvidia are not able to correctly forecast either when they will deliver their technology or how difficult it is to deliver.

Driving is a much more defined environment than general intelligence: the rules are encapsulated in a roughly 200-page book and the mechanics of the car are clearly known. The fact that companies have nevertheless been unable to deliver a self-driving solution makes me think that general intelligence on any reasonable timescale is being massively over-promised. There are no nebulous aesthetics and no grey areas where moral judgements might need to be applied in driving; the car should be programmed to drive from point A to point B while respecting the rules of the road. And yet we still seem to be a long way (10+ years?) from L5 autonomous driving being realistic and widely rolled out.

Apart from the evidence of their previous failure, I have a couple of philosophical arguments which also make me think the current approach is wrong: it tries to make a machine intelligent and aware of its own mistakes, as opposed to making it a good tool which will always need human supervision.

The first is the way that intelligence has evolved naturally. We can see how intelligence develops by looking at animals. Memory, theory of mind, emotions and language are all present to varying degrees and become more advanced as evolution proceeds. Think of the gorilla able to express itself, akin to a seven-year-old, via sign language, or how a dog can communicate when it needs to go out or show its satisfaction at getting attention. Humanity seems to be at the top in terms of intelligence, but possibly only because we don’t yet understand how whales’ brains and communication work. Machine learning, as it is currently being developed, seems far too narrowly focused on predicting meaning and what we want, rather than understanding it and acting with agency, ever to become more than a tool whose output we will have to judge. Will it need to incorporate drive, emotion, fear of death, morality and all the facets of being and personality that make creatures alive before it can achieve intelligence? And if it does, then the ethical issues we have confronted so far will pale in comparison with those of having an entity which is generally intelligent and silicon-based.

The other aspect of machine learning which makes me think the architects have it wrong is the need for more data to improve the models. When a model has been trained on practically all of the cultural output of humanity and still needs more data, it makes me think the model is not set up to learn in a way which would resemble anything we would call intelligence. It is rote learning on a grand scale.

Machine learning as it is currently being developed will be a useful tool but it is much better to refer to it this way than as intelligence.
